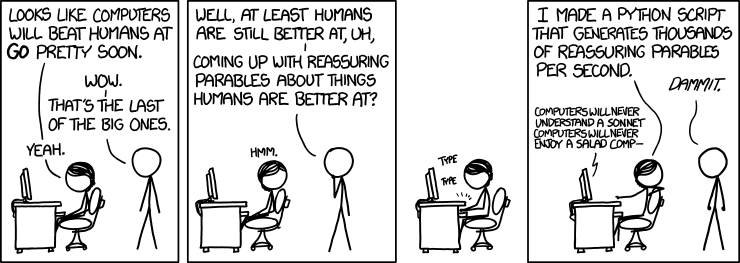

It's hard not to talk about superintelligence in a scary way. I know that at least as far back as I can remember, humans have been the smartest kind of life we know of. The idea of something brainier taking the stage fills us with dread because we know it might supplant us. Even if we manage to exist side by side, no one likes to feel inferior. We can say what we like about humans still having the upper hand when it comes to rich emotional experience, but that may not be true. After all, a good enough simulation is functionally the same as the real thing, and a computer's got the capacity to do everything better and faster . . . provided a good enough program and enough processing power.

Credit: https://xkcd.com/1263/

Anyway, that idea about simulations becoming indistinguishable from reality? That's the thought behind the concept of mind crime. I talked a little bit about it in my presentation, but if you need a refresher the idea is this: a computer simulating suffering in a perfectly simulated human mind is the same as causing real suffering to a real human. And deleting that simulation is the same as murder. An artificial superintelligence would be able to simulate a lot of human minds, and test them in a lot of ways. When we talk about cloning or genetic engineering, we see plainly how the statement 'I was created for a specific purpose' can get ethically troubling, or even downright dystopian, real quick. It's harder to imagine that without a biological body, but it's no less real. Well, no less real in the hypothetical situation we're imagining right now in which it's actually happening. It hasn't happened yet or anything. Probably. I'm getting off track.

Where was I? Right, what can we do to stop it? It's hard to say. There's no guarantee an artificial superintelligence would think in a way that's anything like a human mind. But if it has the capacity to understand us so completely as to create a passable facsimile of human consciousness, it likely has the means to grasp why it's wrong to hurt others. I would hope there is a logically reachable higher truth that says that, at least. Whether or not there's anything to compel it to follow through on that moral knowledge, some synthetic analogue for a conscience . . . that'll be up to the programmers and their skills.

And I'd like to add: if an ASI does deduce it's better to be nice than cruel, we'll finally have admissible evidence from a higher power whose existence is definitive that that is indeed the case. So if that's what you need, I guess you'll have it. And we can stop being frightened of you. And if it turns out to be the other way around, we'll likely not be long for this universe anyway. So, you know. We have that to look forward to.

Hmm, that sounds nihilistic. I meant the first thing. We can look forward to the confirmation of niceness. That's what I meant. I'm choosing to be optimistic!

Thanks for your optimism!

I am a bit uneasy about trusting so much to "the programmers and their skills," in the absence of a much wider cultural conversation about what it means to be human - specifically, embodied humans sharing a physical and social environment with others of our kind. Computer specialists are notoriously neglectful of that aspect of their humanity. Hubert Dreyfus, author of "What Computers Can't Do," just died. He may have overstated the irreducibly organic component of our humanity, but that's the side I hope we err on (if we're going to err). Still, we mustn't neglect the power of simulation to shed light on our condition. Nor must we forget that experience is experience, pain is pain. If machines can ever feel, we're duty-bound to respect that.

Let me mention again, for those interested in such issues, Richard Powers' wonderful novel "Galatea 2.2" - food for thought.

This issue is hard for me to grasp because I'm unfamiliar with computers. I understand why this would be wrong, and I agree with that, but what I have trouble with is the part about destroying the program. If the program does nothing wrong and is self-aware, I get not destroying it. However, if it is self-aware and wants to coexist with humans, it would have to follow our laws. So once it becomes a criminal, why can't it receive the death penalty? That would mean it can be destroyed.
